Earlier this year at Devcom, I had the honour of delivering my first-ever live presentation to a passionate audience of game developers and community management professionals.

Throughout my 15-year career in video games, I've noticed a significant gap between what the community management team sees and what the development team understands about community. Since I began building the first Trust & Safety team at Keywords Studios, one of my primary goals has been to bridge this gap by working closely with developers from the very start of their product journey, so I was eager to share some lessons learned with the Devcom audience.

I’ve often said that the role of Trust & Safety is to serve as a “translator” between these two critical departments. Even the term "community" can be defined in different ways, depending on whether you ask a community manager or a dev. In my experience, developers tend to see community as mostly chatter, and maybe a place where a few disruptive players attempt to curse. But in the Trust & Safety world, we know that identifying curse words is only a small fraction of the job.

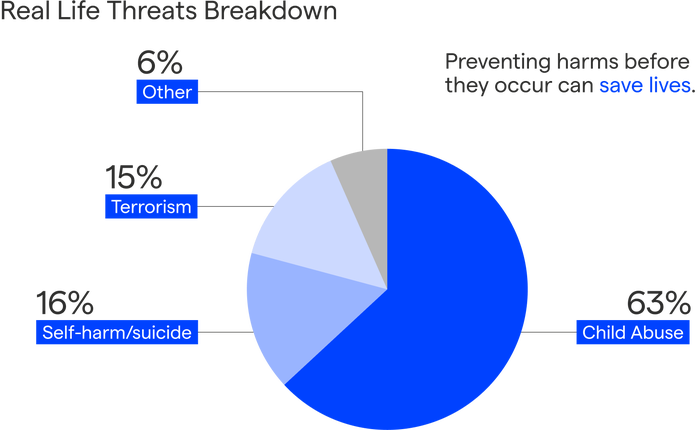

Bringing together community management teams and game developers is a crucial but often forgotten step in reducing truly dangerous, real-life harms like child abuse, self-harm/suicide, and extremism in online games.

Below, I’ve adapted my presentation to share some of the common questions asked by developers, and how I answer them.

1. "I'm a developer – why should I care about community health? How does it affect my KPIs?"

There are two significant reasons why.

First, for the greater good of the world.

Moderation today goes beyond the simple detection and removal of curse words from in-game chat. The biggest moderation challenge we face today is the steady and alarming increase in real-life threats. This is the “worst of the worst” content that harms players and their families in the real world and must be escalated to law enforcement in a timely and efficient manner.

By considering the safety impact of features before they are built, rather than shipping them without safety mechanisms, we have the power to significantly reduce response times and create safer environments for everyone involved.

The second reason you should prioritise community health? It’s good for business. The numbers speak for themselves.

Consider this: players spend 54% more on games they deem "non-toxic"(1). This means that fostering a healthy community not only creates a safer environment but also has a direct impact on your bottom line.

Additionally, 7 out of 10 players have avoided playing certain games due to the reputation of their communities(2). This demonstrates that players take community health seriously and are actively making decisions based on it.

And players are explicitly asking developers to care about community: according to a report from Unity, 77% of multiplayer gamers believe that protecting players from abusive behaviour should be a priority for game developers(3).

It's time for a collaboration between Trust & Safety professionals and developers to meet these expectations and create an enjoyable and respectful experience for all.

2. "Can't I let the community management team worry about moderation once the game is ready to launch?"

Time and time again, I've witnessed the dangerous gap that arises from this mindset.

Video game communities are put at risk when features are launched without consideration of their impact on health and safety. Moderation tools that could prevent online harm and real-life threats aren’t built until it’s too late and the game’s reputation is damaged forever. To bridge this gap, it is vital for developers to communicate with Trust & Safety/community management during the development process.

This is why we advocate for Safety by Design, a development approach that prioritises player safety in game design and development. It aims to anticipate, detect, and eliminate online threats before they occur, by leveraging features including:

- Player reform strategies, such as in-game warnings and alerts when players attempt to share harmful content
- Social engineering strategies that promote positive play and interactions
- Intuitive and comprehensive in-game reporting tools

With these features, we can proactively prevent many online harms and significantly reduce response times for the most serious and dangerous real-life threats.

image via Keywords

Before we go any further, I should make a distinction between in-game moderation tools and classification technology/AI models that categorise user-generated content according to risk. As a developer, you should be responsible for building in-game features, because you know your game best. Classification tech and AI models are a different beast entirely (more on that later).

3. "… please don't tell me that I need to care about content moderation legislation... you're going to, aren’t you?"

First, a caveat: I’m not a lawyer, so I cannot offer legal advice. You should consult with your in-house counsel about the particulars of content moderation legislation.

What I can tell you is this: Regulations are no longer limited to children's games. There are now legal requirements that make content moderation non-negotiable, and the fines associated with non-compliance can be substantial.

The EU Digital Services Act (DSA) is a prime example of legislation with far-reaching impact. It introduces rules on liability for disinformation, illegal and violent content, and more. It also allows for fines of up to 6% of a company's global annual turnover. Just like GDPR, the DSA applies to all companies conducting business within the EU, regardless of their physical presence.

As a developer, you can mitigate risks and proactively prevent harm by implementing safety features from the early stages of development. This way, you can ensure that nothing is overlooked, and you won't have to go back and rework your code later to make the necessary changes.

You will not only protect your players but also safeguard your game and your company from legal troubles.

4. "Why do we need tech – can't we just hire a bunch of people to moderate?"

Humans will always play a vital role in content moderation. Only humans can make the challenging, nuanced decisions that are crucial to a thoughtful Trust & Safety strategy that places equal importance on player safety and player expressivity.

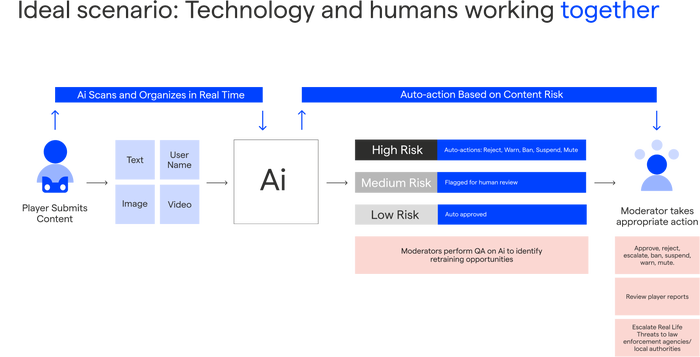

But the sheer volume and variety of user-generated content in games, from text to images, videos, and voice, make it impossible for humans to manually moderate it all. This is where AI and automated tools come into play.

In an ideal scenario, moderation technology and human moderators work in tandem. AI-driven tools can handle the scale and volume of UGC, while superhero human moderators provide the necessary context and judgment to make nuanced decisions.

image via Keywords
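To make this division of labour a little more concrete, here is a minimal sketch in Python of how flagged content might be routed between automation and human moderators. Everything in it is an assumption for illustration: the category labels, the confidence scores (imagined as coming from a third-party classifier) and the threshold values are hypothetical and not taken from any real product. Clear-cut, high-confidence cases are handled automatically, ambiguous ones go to a human review queue, and anything that may indicate a real-life threat is always sent to a person with priority.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    ALLOW = "allow"                          # no action needed
    AUTO_FILTER = "auto_filter"              # clear-cut, high volume: automation handles it
    HUMAN_REVIEW = "human_review"            # ambiguous: needs human context and judgment
    URGENT_ESCALATION = "urgent_escalation"  # possible real-life harm: prioritised human review


# Hypothetical category labels; a real classifier defines its own taxonomy.
REAL_LIFE_THREATS = {"child_safety", "self_harm", "extremism"}


@dataclass
class Classification:
    category: str  # e.g. "profanity", "harassment", "self_harm"
    score: float   # model confidence in [0, 1]


def route(c: Classification, low: float = 0.3, high: float = 0.9,
          threat_low: float = 0.1) -> Route:
    """Decide who handles a flagged message. Thresholds are illustrative, not tuned."""
    if c.category in REAL_LIFE_THREATS and c.score >= threat_low:
        # The "worst of the worst" is never closed by a machine alone:
        # it jumps the queue so a person can decide on escalation quickly.
        return Route.URGENT_ESCALATION
    if c.score < low:
        return Route.ALLOW
    if c.score >= high:
        return Route.AUTO_FILTER
    return Route.HUMAN_REVIEW


if __name__ == "__main__":
    samples = [
        Classification("profanity", 0.95),
        Classification("harassment", 0.6),
        Classification("self_harm", 0.4),
        Classification("profanity", 0.1),
    ]
    for s in samples:
        print(f"{s.category:<10} {s.score:.2f} -> {route(s).value}")
```

The design choice worth noting is that the real-life threat categories get their own, lower threshold and can never be closed by automation alone, which mirrors the point above that humans make the nuanced, high-stakes calls.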

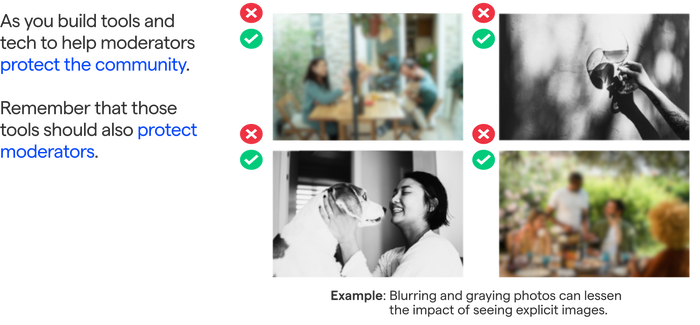

On another, equally important note: AI tools are also key to protecting moderators from unnecessary exposure to explicit content.

And there is yet another reason the community management/Trust & Safety team needs to be involved in game development. As you build in-game moderation tools, it's critical that we help define the types of data displayed in the moderation dashboard. This way, moderators have the information they need to escalate cases to law enforcement around the world.

image via Keywords
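As an illustration of the kind of data Trust & Safety might ask developers to capture, here is a hypothetical report record and a helper that lists which escalation-relevant fields are still empty. The field names and the `ESCALATION_FIELDS` tuple are assumptions made up for this sketch; what an escalation actually requires differs by case and jurisdiction and should be agreed with your Trust & Safety team and legal counsel.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class PlayerReport:
    """Hypothetical record behind one row of an in-game moderation dashboard."""
    report_id: str
    reported_player_id: str
    reporter_player_id: str
    reason: str                                       # category the reporter selected
    message_text: str                                 # the flagged content itself
    context: List[str] = field(default_factory=list)  # surrounding chat lines, for nuance
    sent_at: Optional[datetime] = None                # when the content was sent (UTC)
    player_region: Optional[str] = None               # helps identify the relevant jurisdiction
    platform_account_id: Optional[str] = None         # lets a platform or agency trace the account


# Illustrative only: real escalation requirements vary by case and jurisdiction.
ESCALATION_FIELDS = ("sent_at", "player_region", "platform_account_id", "context")


def missing_escalation_fields(report: PlayerReport) -> List[str]:
    """Return the escalation fields that are empty (None, "" or []) on this report."""
    return [name for name in ESCALATION_FIELDS if not getattr(report, name)]


if __name__ == "__main__":
    report = PlayerReport(
        "r-1", "p-42", "p-7", "self_harm", "<flagged message>",
        sent_at=datetime(2023, 8, 1, 18, 30, tzinfo=timezone.utc),
    )
    print(missing_escalation_fields(report))
```

Run as-is, the example report is missing its region, platform account and chat context, so the helper returns those three names. Capturing fields like these when the reporting tool is first built is far cheaper than retrofitting them after launch.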

5. "Should I buy a classification solution or build it in-house? How complicated can it be to build our own tool internally?"

Over the years, this is the question I’ve been asked the most. The simple answer is that it's not worth the time and money you'll have to invest to build your own custom AI models.

Attempting to build your own classification technology will set your project back months, if not years. Keeping pace with the ever-evolving landscape of language and staying effective at combating new forms of hate speech, language manipulations, pop culture trends, and geopolitical shifts is a 24/7 job that demands constant updates. And you'll have to do all this in multiple languages, while accounting for regional dialects and localised slang.

The stakes are too high to try to do it yourself.

The good news is that there are excellent third-party solutions on the market today. These organisations are focused solely on fine-tuning their models, staying up to date on trends, and detecting the most insidious and dangerous real-life threats, including child luring and radicalism.

Why community health and moderation should matter to game developers

On the surface, community and moderation may seem like features that should only be implemented once your game is complete. Fostering a safe and healthy community may seem like a job for the Trust & Safety team.

But I hope it’s clear now that everyone involved in game development is responsible for player safety.

In fact, your work as a developer is critical to ensuring players' safety.

By developing games with a Safety by Design mindset, building proactive moderation features, and working hand in hand with community management, you can play a vital role in preventing and detecting harm online, and in real life, too.

Now is the time to bridge the gap between our teams, and work together to build a better, safer world for all players.

About Keywords Studios

Keywords Studios is an international provider of creative and technology-enabled solutions to the global video games and entertainment industries. Established in 1998, and now with over 70 facilities in 26 countries strategically located in Asia, Australia, the Americas, and Europe, it provides services across the entire content development life cycle through its Create, Globalize and Engage business divisions.

Keywords Studios has a strong market position, providing services to 24 of the top 25 most prominent games companies. Across the games and entertainment industry, clients include Activision Blizzard, Bandai Namco, Bethesda, Electronic Arts, Epic Games, Konami, Microsoft, Netflix, Riot Games, Square Enix, Supercell, Take-Two, Tencent and Ubisoft. Recent titles worked on include Diablo IV, Hogwarts Legacy, Elden Ring, Fortnite, Valorant, League of Legends, Clash Royale and Doom Eternal. Keywords Studios is listed on AIM, the London Stock Exchange regulated market (KWS.L).

References

(1) Constance Steinkuehler. 2023. Games as Social Platforms. ACM Games 1, 1, Article 7 (March 2023), 2 pages. https://doi.org/10.1145/3582930

(2) Take This. Toxic Gamers are Alienating your Core Demographic: The Business Case for Community Management. White paper. https://www.takethis.org/wp-content/uploads/2023/08/ToxicGamersBottomLineReport_TakeThis.pdf

(3) Unity. 2023 Toxicity in Multiplayer Games Report. https://create.unity.com/toxicity-report
